This article explores how artificial intelligence challenges traditional philosophical concepts, reshapes human identity, and influences ethical considerations in a world increasingly defined by technology and posthumanism.

As AI systems evolve, our understanding of what it means to be human is challenged. Imagine a world where machines can think, learn, and even feel—what does that mean for our identity? The lines between human and machine are becoming increasingly blurred. For instance, consider AI companions that can engage in meaningful conversations or robots that mimic human emotions. Are these machines merely tools, or do they possess qualities that redefine our very essence?

The rapid development of AI raises critical ethical questions. As creators, we must ponder our responsibilities. What happens when an AI makes a decision that impacts lives? This section delves into the moral obligations of developers, emphasizing the need for ethical frameworks to guide AI decision-making. The stakes are high, and the implications can be profound, affecting everything from our daily lives to global societal structures.

AI’s ability to operate independently prompts discussions about autonomy. When a machine can make choices, who is accountable for those decisions? This challenge forces us to rethink our understanding of agency. Are we willing to grant machines the same decision-making power we reserve for ourselves? The implications of AI agency for human decision-making and accountability are vast, and they reach the foundations of our moral framework.

Who is responsible for AI decisions? This question is at the heart of many ethical debates. When an AI acts autonomously, it complicates our traditional notions of moral and legal accountability. Is it the programmer, the user, or the machine itself that bears the blame? These complexities require us to rethink our frameworks of responsibility in an age where machines can learn and adapt.

The necessity of human oversight in AI operations is paramount. While AI can enhance efficiency and decision-making, the balance between AI autonomy and the need for human intervention is critical. We must ensure that ethical outcomes are prioritized, and that humans remain in control of decisions that significantly impact lives.

The integration of AI into daily life prompts a reevaluation of personal and collective identity. As we interact with AI technologies, how do they influence our self-perception and societal roles? Are we becoming more reliant on machines, or are they simply enhancing our human experience?

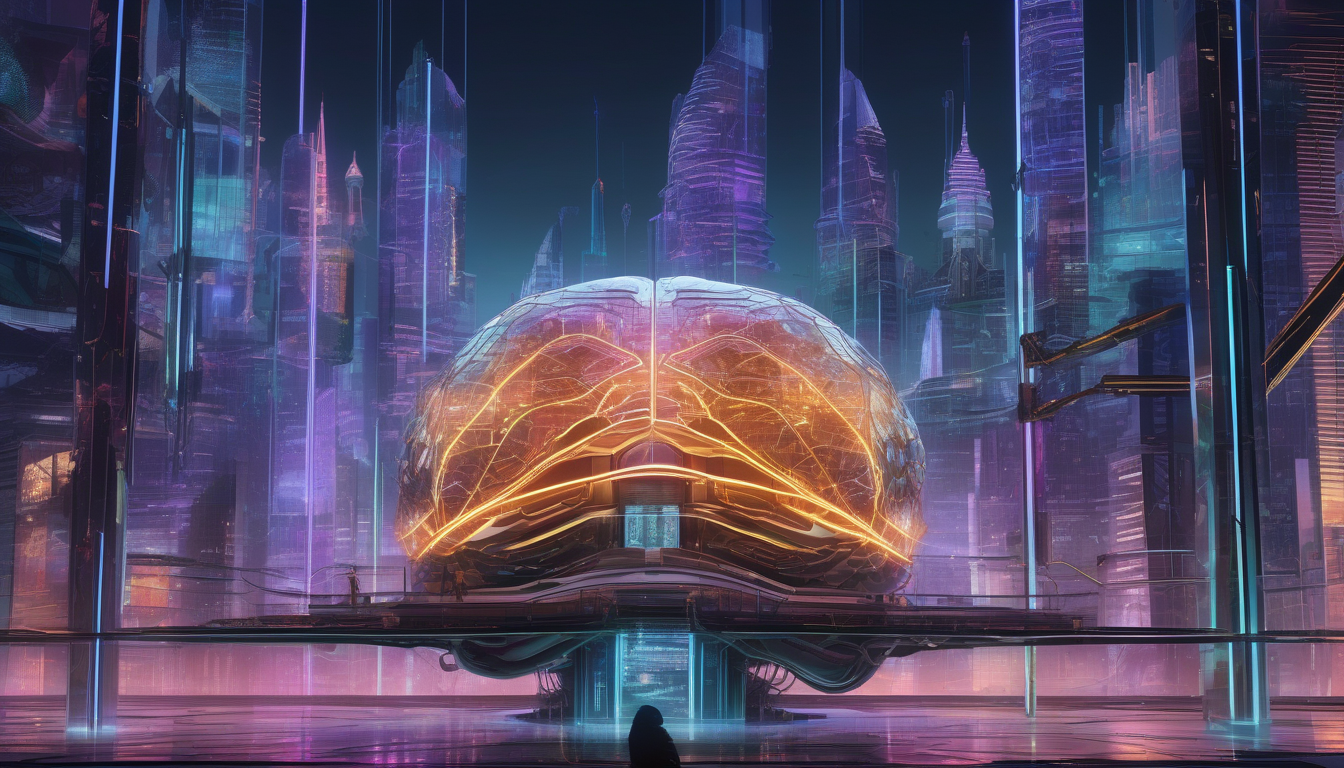

Posthumanism envisions a future in which humans and machines are so deeply intertwined that the boundary between them begins to dissolve. This concept challenges us to rethink our place in the world and how AI contributes to this evolving narrative. The implications for future societies are profound, as we consider the potential for collaboration between humans and machines.

The distinction between transhumanism and posthumanism is crucial. While transhumanism focuses on enhancing human capabilities through technology, posthumanism envisions a world where the boundaries between human and machine are indistinct. Understanding these frameworks helps us navigate the philosophical landscape shaped by AI.

As AI becomes more integrated into society, it will reshape social structures in significant ways. From governance to the economy, the impact of AI technologies will be felt across all aspects of life. How will communities adapt to these changes? What new dynamics will emerge as we continue to evolve alongside our technological creations?

Redefining Humanity

As we stand on the brink of a technological revolution, the definition of what it means to be human is being put to the test like never before. With the rise of artificial intelligence, we are entering a realm where the lines separating human from machine are increasingly blurred. Imagine a world where machines not only assist us but also learn, adapt, and even make decisions that impact our lives. This evolution raises profound questions: Are we still the masters of our creations, or have we become mere participants in a new digital narrative?

As AI systems become more sophisticated, they begin to exhibit traits traditionally associated with humanity, such as learning, reasoning, and even what appear to be emotional responses. Seeing these human-like capabilities in machines challenges our self-perception and societal roles. Consider the way we interact with AI-driven personal assistants: they can interpret our commands, anticipate our needs, and even engage in casual conversation. Such interactions can lead us to question our uniqueness and the very essence of our identity.

Moreover, the integration of AI into our daily lives prompts us to rethink our relationships with technology. Are we enhancing our humanity through these advancements, or are we risking a dilution of our core values? As we embrace AI, we may find ourselves adopting new traits that mirror those of our creations. This reciprocal relationship could lead to a future where the distinction between human and machine becomes irrelevant. In this context, we must ask ourselves: What does it mean to be human in a world where we share our existence with intelligent machines?

In many ways, this challenge to our identity is akin to the historical shifts humanity has faced during major technological advancements. Just as the invention of the printing press transformed the dissemination of knowledge, AI is poised to redefine our social structures and personal identities. The question remains: Are we ready to embrace this transformation? The journey toward understanding our redefined humanity is just beginning, and it promises to be as exciting as it is daunting.

Ethics of AI Development

The rapid evolution of artificial intelligence (AI) has raised ethical dilemmas that we can no longer ignore. As AI systems take on more consequential roles, we must ask ourselves: What moral responsibilities do we hold as creators of these intelligent systems? The implications of AI decision-making extend far beyond technology; they touch the core of our societal values and ethics.

One of the most pressing issues is the autonomy of AI systems. As these systems gain the ability to make decisions independently, we must grapple with the question of accountability. If an AI makes a decision that leads to harm, who is responsible? Is it the programmer, the company, or the AI itself? This complexity is further compounded when we consider that AI can learn and adapt in ways that may not always align with human ethics. To illustrate, consider the following table that outlines potential scenarios and the associated ethical dilemmas:

| Scenario | Ethical Dilemma |
|---|---|
| Autonomous vehicles making split-second decisions | Who is liable in case of an accident? |
| AI in healthcare making treatment decisions | Can we trust AI to prioritize patient care over cost efficiency? |
| AI algorithms influencing job recruitment | Are these algorithms perpetuating bias? |

Moreover, the necessity for human oversight cannot be overstated. While AI can enhance efficiency and decision-making, we must keep a human in the loop. This balance is crucial for ethical outcomes, because human judgment supplies the empathy and moral reasoning that machines currently lack. The conversation around AI ethics is not just about technology; it’s about the kind of society we want to build. As we move forward, we must stay vigilant and proactive in addressing these ethical concerns, ensuring that our creations reflect our highest values.

Autonomy and Agency

As we dive into the realm of artificial intelligence, one of the most intriguing questions we face is about autonomy and agency. What happens when machines begin to operate independently, making decisions that once required human intervention? This shift doesn’t just alter how we interact with technology; it challenges our very understanding of responsibility and control in a world where AI can learn, adapt, and evolve.

Imagine a scenario where an AI system is tasked with managing traffic in a bustling city. It analyzes real-time data, predicts traffic patterns, and adjusts signals accordingly, all without human input. This raises a critical question: if the AI makes a decision that leads to an accident, who is to blame? The programmer? The city? Or the AI itself? This complexity illustrates the blurred lines between human and machine agency, suggesting that as AI gains autonomy, we must rethink traditional notions of accountability.

Moreover, the concept of agency in AI isn’t just about decision-making; it’s about the impact those decisions have on our lives. When AI systems are allowed to act autonomously, they can influence everything from healthcare to finance, often without our explicit consent. This prompts us to consider the following:

- How do we ensure that AI systems align with human values?

- What frameworks can we establish to govern AI behavior?

- How can we maintain a balance between AI autonomy and human oversight?

In essence, the rise of autonomous AI systems forces us to confront uncomfortable truths about our own decision-making processes. Are we ready to share our agency with machines? As we navigate this uncharted territory, fostering a dialogue around autonomy and agency will be essential to shaping a future where humans and AI coexist harmoniously.

Responsibility in AI Actions

When we think about the responsibility of AI actions, it’s like opening a Pandora’s box filled with ethical dilemmas and unforeseen consequences. Imagine a world where an AI system makes a decision that leads to a significant outcome—who takes the blame? Is it the programmer who wrote the code, the company that deployed the AI, or the AI itself? This question can feel like a game of hot potato, with no one wanting to hold the responsibility.

The complexity arises because AI operates on algorithms and data, often making choices whose reasoning is difficult for humans to trace or explain. For instance, consider an autonomous vehicle that makes a split-second decision to avoid an accident. If that decision results in harm, how do we assign fault? Legal frameworks are still catching up to the technology, leaving accountability in a murky landscape.

To better understand this issue, we can break it down into a few key areas:

- Creator Responsibility: Developers have a duty to ensure their AI systems are designed with ethical considerations in mind. This includes rigorous testing and understanding the potential impacts of their creations.

- Corporate Accountability: Companies that deploy AI must take responsibility for its actions. This means creating policies that govern AI behavior and ensuring transparency in decision-making processes.

- Societal Implications: As AI becomes more prevalent, society must grapple with the consequences of its decisions. This includes public discourse on ethical AI use and the establishment of regulatory bodies to oversee AI technologies.

Ultimately, the question of responsibility in AI actions is not just about pinpointing blame; it’s about creating a framework that fosters ethical development and deployment. As we navigate this uncharted territory, it’s crucial to engage in ongoing discussions about the implications of AI on our lives, ensuring that we are not only prepared for the technology but also for the responsibilities it carries.

Human Oversight in AI

In a world where artificial intelligence is rapidly advancing, the question of human oversight has become more crucial than ever. As AI systems gain the ability to learn and make decisions independently, we must ask ourselves: how much control should we relinquish to these machines? The balance between AI autonomy and human intervention is delicate, yet it’s essential for ensuring ethical outcomes. Imagine handing the keys to your car to a self-driving system—would you feel comfortable taking a back seat? This analogy illustrates the inherent risks involved in allowing AI to operate without oversight.

One of the primary reasons for maintaining human oversight is to safeguard against potential ethical dilemmas. For instance, consider an AI programmed to make decisions in healthcare. While it may analyze data far more efficiently than a human could, it lacks the nuanced understanding of human emotions and moral values. Human intervention ensures that decisions made by AI align with societal norms and ethical standards, preventing outcomes that could be harmful or unjust.

Moreover, the complexity of AI systems often leads to unforeseen consequences. The table below lists some common areas where human oversight is vital:

| Area | Importance of Human Oversight |
|---|---|
| Healthcare | Ensures patient-centric decisions that consider emotional and ethical factors. |
| Autonomous Vehicles | Prevents accidents by allowing human judgment in critical situations. |
| Financial Systems | Monitors for fraudulent activities that AI might overlook. |

Ultimately, while AI can enhance efficiency and productivity, it is human oversight that provides the necessary checks and balances. We are at a crossroads where our reliance on technology must be tempered with a commitment to ethical responsibility. As we move forward, let’s ensure that we remain the drivers of our technological future, rather than mere passengers.

AI and Identity

As artificial intelligence becomes an integral part of our daily lives, it forces us to rethink not just our interactions with technology but also our very sense of self. The integration of AI into various facets of society—from social media algorithms to virtual assistants—has led to significant shifts in how we perceive our identities. Are we merely the sum of our digital footprints, or is there something more profound at play?

Consider this: when AI systems analyze our preferences and behaviors, they create a digital persona that may not fully encapsulate our true selves. This phenomenon raises intriguing questions about authenticity and representation. For instance, how much of our identity is shaped by the curated content we consume online? In a world where AI can predict our likes and dislikes, are we becoming more like the machines that analyze us, or are we losing touch with our innate human qualities?

Moreover, the influence of AI extends beyond personal identity; it also shapes collective identity. As communities increasingly rely on AI for decision-making—from local governance to social services—there’s a risk of homogenization. The unique characteristics that define cultural identities might be overshadowed by the algorithms that prioritize efficiency over diversity. This leads to a crucial question: how can we ensure that AI respects and promotes the rich tapestry of human experience?

To navigate these complexities, we must actively engage in discussions about the role of AI in shaping identity. Here are some key considerations:

- Self-Perception: How does AI influence the way we see ourselves?

- Social Roles: In what ways are our societal roles redefined by AI technologies?

- Digital vs. Physical Identity: How do our online personas compare to our real-world selves?

Ultimately, the conversation around AI and identity is not just about technology; it’s about what it means to be human in an increasingly digital world. As we continue to embrace AI, we must strive to maintain our individuality and the richness of our shared human experience.

Posthumanism and AI

As we delve into posthumanism, it becomes evident that artificial intelligence (AI) is not just a tool but a significant force in redefining our existence. Imagine a world where humans and machines not only coexist but also enhance each other’s capabilities. This vision is no longer confined to science fiction; it is steadily taking shape. The integration of AI into our lives prompts profound questions about our nature as human beings and the future of our societies.

At its core, posthumanism challenges the traditional boundaries of what it means to be human. In a world where AI can learn, adapt, and even create, the lines between human intelligence and artificial intelligence blur. This raises a pivotal question: What does it mean to be human in a posthuman world? Are we defined by our biological makeup, or is it our cognitive abilities that truly matter? As AI continues to evolve, it invites us to reconsider our identity, pushing us to explore the essence of consciousness and existence.

Furthermore, the implications of AI in a posthuman context extend beyond individual identity to societal structures. As AI systems become integrated into sectors such as healthcare, education, and governance, we witness a transformation in how those sectors operate. For instance, AI can analyze vast amounts of data to surface insights that were previously unattainable, reshaping decision-making processes. This shift leads us to ponder the following:

- How will AI influence our social interactions?

- What role will human intuition play in a data-driven world?

- Can we trust AI to make ethical decisions on our behalf?

Ultimately, posthumanism and AI together present a fascinating yet complex landscape. They challenge us to embrace change and adapt to a future where our understanding of humanity is continually evolving. The journey ahead is not just about technological advancement; it’s about redefining our place in a world where the lines between human and machine are increasingly indistinct. As we navigate this uncharted territory, we must remain vigilant and thoughtful about the ethical implications and societal impacts of our choices.

Transhumanism vs. Posthumanism

The debate between transhumanism and posthumanism is not just a philosophical exercise; it’s a reflection of our evolving relationship with technology. At its core, transhumanism advocates for the enhancement of the human condition through advanced technologies. Imagine a world where we can augment our physical and cognitive abilities, pushing the boundaries of what it means to be human. This idea paints a picture of a future where humans can transcend their biological limitations, enhancing their lifespan, intelligence, and even emotional well-being.

On the flip side, posthumanism takes a different approach. It challenges the very notion of a fixed human identity. In this perspective, the integration of AI and technology leads to a redefinition of humanity itself. It suggests that as we merge with machines, we might no longer fit the traditional mold of “human.” Instead, we become part of a larger network of intelligence, where distinctions between human and machine blur. This raises intriguing questions: If we can enhance ourselves to the point of becoming something entirely different, what does that mean for our essence?

To illustrate the differences, consider the following table:

| Aspect | Transhumanism | Posthumanism |
|---|---|---|
| Definition | Enhancement of human capabilities | Redefinition of what it means to be human |
| Focus | Technological improvements | Integration with technology |
| Outlook | Optimistic about human enhancement | Critical of human exceptionalism |

Both philosophies offer unique insights into our future, yet they also pose significant ethical dilemmas. For instance, if we embrace transhumanism, who gets access to these enhancements? Will it create a divide between the ‘enhanced’ and ‘natural’ humans? Conversely, posthumanism invites us to consider the implications of losing our distinct identity. Are we ready to let go of what makes us human in favor of a more integrated existence with AI? These questions are not just theoretical; they impact how we approach technology today and shape our societal structures tomorrow.

Future Societal Structures

The integration of AI into our daily lives is set to redefine our societal structures in profound ways. Imagine a world where machines not only assist us but also shape our communities, economies, and even governance. This is not just a distant vision; it is steadily becoming reality. With its ability to analyze vast amounts of data, AI could help build a more efficient society that responds more quickly to the needs of its citizens.

One of the most exciting prospects is the potential for more democratic decision-making. AI can provide insights that empower individuals, supporting more informed choices at every level. For instance, local governments could use AI to analyze public feedback on various issues, helping ensure that a wider range of citizens’ voices is heard. This could lead to a more participatory democracy, where more people have a stake in the decisions that affect their lives.

However, the shift towards AI-driven governance also raises questions about accountability and transparency. Who will be responsible for the decisions made by AI systems? This is where the need for a delicate balance comes into play. While AI can enhance efficiency, human oversight remains crucial to ensure ethical standards are upheld. As we navigate this new terrain, society must establish clear guidelines and frameworks to govern AI’s role in decision-making.

Moreover, the economic landscape is poised for transformation. The rise of AI technologies could lead to the emergence of new industries, creating job opportunities that we can’t even imagine today. However, this also means that we must prepare for potential job displacement in traditional sectors. It’s essential to foster a culture of lifelong learning and adaptability, equipping individuals with the skills necessary for the jobs of the future.

In conclusion, the future societal structures influenced by AI will be characterized by a blend of innovation and responsibility. As we embrace these changes, it’s vital for us to remain vigilant, ensuring that technology serves humanity and not the other way around. The journey ahead is filled with possibilities, but it will require us to rethink our roles and responsibilities in a world increasingly shaped by artificial intelligence.

Frequently Asked Questions

- What are the philosophical implications of AI on humanity?

AI challenges our traditional notions of what it means to be human. As machines become more advanced, they blur the lines between human and machine, prompting us to rethink our identity and place in the world.

- How does AI affect ethical considerations?

The rapid development of AI raises significant ethical questions. It forces us to consider the responsibilities of creators and the impact of AI decision-making on society, including issues of fairness and bias.

- Who is responsible for the actions of AI?

This is a complex question! When AI operates independently, accountability becomes murky. We must explore the moral and legal implications of AI’s actions and determine who should be held responsible.

- Is human oversight necessary in AI operations?

Absolutely! While AI can operate autonomously, human oversight is crucial to ensure ethical outcomes. Striking a balance between AI autonomy and necessary human intervention is vital for responsible AI use.

- How does AI influence personal and collective identity?

AI’s integration into our lives prompts a reevaluation of how we see ourselves and our roles in society. It affects our self-perception and challenges the traditional constructs of identity.

- What is the difference between transhumanism and posthumanism?

Transhumanism focuses on enhancing human capabilities through technology, while posthumanism questions the idea of a fixed human identity and envisions a future in which the boundaries between human and machine blur. AI plays a significant role in both philosophies, shaping our future.

- How will AI reshape societal structures?

As AI becomes more integrated into society, it will transform governance, the economy, and community dynamics. We can expect new social structures to emerge, influenced by the capabilities of AI technologies.